Reposted from https://blogs.nasa.gov/mission-ames/2012/07/19/post_1342737399087/.

Ames scientist Kimberly Ennico wrote this blog entry on July 16, 2012 while working on a field test for RESOLVE, the Regolith and Environment Science and Oxygen and Lunar Volatile Extraction. This week you can follow RESOLVE live on Ustream [Note: link was active in 2012].

On this 43rd anniversary of the Apollo 11 launch, the moon is on my mind, naturally.

I am a NASA scientist who typically spends days in the office staring at my computer screen, “crunching” data, making plots, analyzing the results and writing up what is being demonstrated by the completed experiment. You know, that scientific method. The experiment could be an observing run at an observatory, a lab-based test or a series of images taken by a space telescope (at some past date) that is sent down to the ground or retrieved from a data archive. Quite frankly, I am often a passive (but always strongly passionate) participant in the advancement of science.

This week, I found myself in one of those rare moments where I am contributing to the operations of a space mission. In this case, it was a field demonstration of a future lunar rover. For full disclosure, in 2009 I also got the chance to participate in a real space mission with LCROSS, which was an amazing experience. This “field demo” experience is slightly different and provided me with an opportunity to reflect on the power and honest “truth of iteration” and how valuable it is for learning.

First, I need to set the stage. We are now in day four of a seven-day “field demo” of the RESOLVE payload. RESOLVE stands for Regolith and Environment Science & Oxygen and Lunar Volatile Extraction. Snazzy name, eh? It is a payload designed to be on a movable platform on the moon. Its objective is to locate areas of high hydrogen, which is an indicator of possible water ice in the subsurface. After identifying a “hot spot,” the rover is commanded to stop and begin initial “augering” with active monitoring for water and other molecular signatures. The augering process takes about an hour and samples down to about 30-50 centimeters (to give us a sneak peek of what lies beneath). It can drill deeper, but the amount of subsurface material brought up is expected to be limited. If we find something interesting, the drill is put into place to drill down 1 meter, the limit of our initial surface detection methods, and extract “core samples” from a series of depths. Finally, the last leg of this “roving laboratory” is to take each of the “core samples” and place it inside an oven where it is heated to liberate the molecules. To identify what is in the sample, the vapors are collected by a mass spectrometer and gas chromatograph. It is essentially a mini-lab, very similar in approach to the Mars Science Laboratory currently en route to the Red Planet.

Right: The RESOLVE payload on the Artemis Jr. rover (left) and mock-lander (right) during a field demo exercise in Hawaii (Photo from RESOLVE team).

Why are we doing this? Well, not only has LCROSS data indicated the presence of water on the moon, but datasets from NASA’s Lunar Reconnaissance Orbiter, Lunar Prospector, Cassini, Deep Impact and Clementine also point to “significant quantities” of hydrogen-bearing molecules, particularly in permanently shadowed craters near the lunar poles. The form of the hydrogen is widely debated, so there is a need for “ground-truthing.” Let’s dig and sample that dirt! At the same time, these hydrogen-bearing molecules could also be extremely useful resources for water, propellant, etc. So in comes a trendy buzzword in space circles, ISRU, which stands for In-Situ Resource Utilization. Historically, when explorers reached new lands on our planet, those who could “live off the land” using items found along the way considerably extended their exploration journeys, because they did not have to carry everything with them (not to mention the related expenses). Think hunting and gathering for food, making shelters from forests, mining local fossil fuels, etc. As we journey to other worlds, we can do the same.

Enter analog missions. We’re using Hawaii for the moon. Sadly I am not there (my scuba diving urges will just need to wait). I am sitting in the “Science Backroom” here at NASA’s Ames Research Center in Moffett Field, Calif. It is basically a room full of desks, with lots of computers and monitors and headsets to hear audio loops.

Right: Me (left) and Carol Stoker, drill expert and Mars scientist, in the Ames Science Backroom looking over activities. Image credit: Jen Heldmann

Analog missions have been performed for years to validate architecture concepts, conduct technology demonstrations and gain a deeper understanding of system-wide technical and operational challenges. And let me say, on day four, it is doing exactly that. A lot of stops and gos, lots of “oh I wish I could see this or that,” etc. etc.

We have a distributed team, and this is not uncommon for most operations activities. Some are in Hawaii to assist with physical items associated with the rover and payload. At a nearby building in Hawaii, others have access to real-time data for monitoring and control. Supporting control centers are in place in Texas (NASA’s Johnson Space Center in Houston) and Florida (NASA’s Kennedy Space Center), while in California (here at Ames), others support the mission science with access to high-tech visualization tools. We all have our respective roles, but we need to work as an integrated team to achieve success. At the same time, “not all should speak at once,” otherwise you talk past each other and you can miss things. So there is a bit of needed protocol at work. In our “science backroom” we talk with our on-site science reps Tony Colaprete & Rick Elphic, who are actively monitoring the flight and rover voice-loops, which we may not always be listening in on. Our purpose is to focus on real-time and archival science analysis, because, when you come right down to it, we are exploring. We don’t know what we will find next.

This mission concept also relies on humans to make decisions at critical junctures, so it is far from being an autonomous machine. And by involving people, you can run into some pretty interesting decision points. We are also test driving (pardon the pun) a new way to integrate our visualization of our data. We have the “typical” live telemetry from the instruments on the rover and their trends. But we are also overlaying in near-real time the instruments’ data and rover position onto a Google map. We are taking advantage of an existing data visualization tool and its properties (layers, grids, pin locations, commentary, etc.). And we are linking the photos from the on-board rover cameras to the Google maps just like people do with their holiday pictures. It is actually rather elegant, but also needs some tweaks.
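For the curious, here is a minimal sketch of what “overlaying instrument data and rover position onto a map” can look like in code. It is not the tool our team uses. It assumes the telemetry arrives as simple (latitude, longitude, neutron counts) samples and writes them out as KML, a format that map viewers such as Google Earth can load. The function names, the blue-to-red color ramp and the sample coordinates are all made up for illustration.

```python
import xml.etree.ElementTree as ET


def signal_to_kml_color(value, lo, hi):
    """Map a signal value to a KML color string, blue (low) to red (high).

    KML colors are written as aabbggrr hex, so alpha comes first and
    blue comes before red.
    """
    if hi <= lo:
        frac = 0.0  # flat signal: everything gets the "low" color
    else:
        frac = min(max((value - lo) / (hi - lo), 0.0), 1.0)
    red = round(255 * frac)
    blue = 255 - red
    return f"ff{blue:02x}00{red:02x}"


def traverse_to_kml(samples):
    """Build a KML string with one colored placemark per sample.

    `samples` is a list of (lat, lon, counts) tuples (hypothetical layout).
    """
    counts = [c for _, _, c in samples]
    lo, hi = min(counts), max(counts)
    kml = ET.Element("kml", xmlns="http://www.opengis.net/kml/2.2")
    doc = ET.SubElement(kml, "Document")
    for i, (lat, lon, c) in enumerate(samples):
        placemark = ET.SubElement(doc, "Placemark")
        ET.SubElement(placemark, "name").text = f"sample {i}: {c} counts"
        style = ET.SubElement(placemark, "Style")
        icon_style = ET.SubElement(style, "IconStyle")
        ET.SubElement(icon_style, "color").text = signal_to_kml_color(c, lo, hi)
        point = ET.SubElement(placemark, "Point")
        # KML orders coordinates as longitude,latitude.
        ET.SubElement(point, "coordinates").text = f"{lon},{lat}"
    return ET.tostring(kml, encoding="unicode")


# Made-up samples, roughly in Hawaii, purely for illustration.
demo_samples = [(19.7, -155.5, 12), (19.7001, -155.5001, 40)]
demo_kml = traverse_to_kml(demo_samples)
```

Saving `demo_kml` to a `.kml` file and opening it in a map viewer would show two pins, the stronger signal in red and the weaker in blue. Camera images could be attached in the same spirit by adding a description to each placemark.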

Above: (Left) Screengrab of one of our visualization tools of a traverse-trek with data from the neutron spectrometer colorized to indicate signal strength. The green line shows the planned path; red line shows the actual path. Shown here is the trace from a “hotspot localization” routine so we can pinpoint the source further for analysis. (Right) Same scene but this time overlaid are locations of each of our rover-camera images, with one shown as an example.

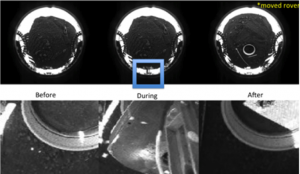

Right: Examples of rover images before, during, and after a drilling exercise from (top) a fish-eye lens camera in the rover underbelly and (bottom) a camera that is coincident with the near infrared spectrometer field of view.

We are learning as we go. Each day we have a series of tasks to complete, whether it is a number of meters to traverse or completing the drilling of multiple sites, etc. The timeline is extremely important, since in a real flight mission there will always be limited resources such as fuel or power. Roving plans are decided ahead of time, but things do not always go according to plan, especially if something we did not expect appears on our data streams or the rover does something unexpected on unexplored terrain. With each unexpected event, we learn.

My personal challenge is keeping up with all the conversations going on, those that address what we are seeing real-time, those that are focused on how we can improve our approach and those all about the real thing — when we do this on the moon.

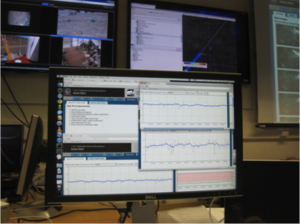

Right: This was my monitor today as I was trying out a new method to look for a water signature, monitoring any correlation between the neutron spectrometer signal and various band depths from the near infrared spectrometer. My requests for a new visualization tool are being incorporated into the system and will be ready for me when I go on console tomorrow.
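As a rough illustration of that kind of check (not the actual analysis code from the field test), here is a minimal sketch that computes a band depth from two reflectance values and then the Pearson correlation between a neutron count series and a band depth series. The band depth definition used here, 1 minus the ratio of in-band to continuum reflectance, is a common convention that I am assuming; the function names and the sample numbers are made up.

```python
from math import sqrt


def band_depth(r_band, r_continuum):
    """Band depth as 1 - R_band / R_continuum (assumed definition)."""
    return 1.0 - r_band / r_continuum


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length, at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


# Made-up numbers for illustration only: neutron counts and matching
# (in-band, continuum) reflectance pairs at the same rover positions.
neutron_counts = [120, 118, 95, 90, 117]
reflectances = [(0.29, 0.30), (0.29, 0.30), (0.24, 0.30), (0.23, 0.30), (0.28, 0.30)]
depths = [band_depth(rb, rc) for rb, rc in reflectances]
r = pearson_r(neutron_counts, depths)
```

A value of `r` near +1 or -1 means the two instruments are moving together (or in opposition) along the traverse, which is the kind of hint worth a closer look. A value near 0 means no linear relationship shows up in that stretch of data.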