July 11, 2017

Well, that was a fascinating flight, with a very different “feel” from the infrared astronomy SOFIA flights I have been on these past two weeks. First of all, we took off in the daytime and spent a good fraction of our time with the telescope door closed. Usually our flying observatory takes off at sunset to maximize our time under dark skies. On July 10th we had a very different objective.

We were going to hunt the shadow of the Kuiper Belt Object 2014 MU69 as it passed in front of a 15th-magnitude star. Its projected shadow path on Earth lay well north of New Zealand, and we needed a lot of time to get there for the event while keeping enough fuel to return to Christchurch.

First we needed to get off the ground, and that had been riddled with weather gremlins. The forecast showed extremely turbulent weather on our outbound route to the north that we could neither fly around nor fly through. We could have flown under it, but that diversion would burn more fuel than the nominal plan allowed, and we risked not having enough to get back home. The pilots were able to get a report from another pilot in the region, who said the turbulence was in reality not that severe. That meant we were good to go! However, this information gathering caused a 15-minute delay. The flight plan could tolerate it, but those 15 minutes ate into the budgeted 30-minute margin. We now had to absorb a 15-minute adjustment and still hit a point in time and space to within 1 second. We were ready!

Catching SOFIA’s shadow after takeoff.

Uncommon view from SOFIA, having taken off before sunset, now flying north of New Zealand’s North Island.

During the few-hour wait for sunset, the New Horizons team had time to go over the plan, recheck all the computations, and so on. Most importantly, they ran timing tests to reconcile the differences between UTC (Coordinated Universal Time) and GPS time. I had no idea there was a difference. The pilots fly and adjust their timing using their GPS clock, but all the mission and science planning used UTC. GPS time does not include leap seconds, so it pulls one second further ahead of UTC each time a leap second is added (18 seconds ahead as of 2017). GPS timing receivers normally apply this offset to convert GPS time to UTC, and we had to check that ours were doing so. During the time check we also tuned the radio to WWVH, the U.S. National Institute of Standards and Technology’s shortwave time signal station in Hawaii. What better way to spend idle time than listening to the beeps of a radio time signal!
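
To make the offset concrete, here is a minimal Python sketch of the GPS-to-UTC correction. It assumes a fixed GPS minus UTC offset of 18 seconds, the value in effect from January 1, 2017. A real timing receiver takes the current offset from the GPS broadcast rather than hard-coding it. The function names are illustrative, not taken from any flight software, and the July 10 date is assumed from the flight described here.

```python
from datetime import datetime, timedelta

# Assumption: GPS time runs 18 s ahead of UTC, the offset in effect from 2017-01-01.
# A real receiver reads the current offset from the GPS navigation message instead.
GPS_MINUS_UTC_2017 = timedelta(seconds=18)


def gps_to_utc(gps_time: datetime, offset: timedelta = GPS_MINUS_UTC_2017) -> datetime:
    """Convert a GPS-scale timestamp to UTC by removing the accumulated leap seconds."""
    return gps_time - offset


def utc_to_gps(utc_time: datetime, offset: timedelta = GPS_MINUS_UTC_2017) -> datetime:
    """Inverse conversion: what a GPS-scale clock reads at a given UTC instant."""
    return utc_time + offset


# Predicted event time from the flight plan, in UTC (date assumed: July 10, 2017).
event_utc = datetime(2017, 7, 10, 7, 49, 11)
event_gps = utc_to_gps(event_utc)
print(event_gps.time())  # 07:49:29
```

An uncorrected GPS clock would therefore put the aircraft at the interception point 18 seconds early relative to a UTC-based prediction, far outside a 1-second tolerance.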

Marc Buie (left), lead scientist who computed the shadow predictions, brings Alan Stern (right), New Horizons mission Principal Investigator, up to speed on the latest predictions.

Manuel Wiedemann (left) and Enrico Pfueller (right), our instrument scientists who operate the high-speed photometer, get their equipment set up.

The flight plan had a short setup leg to confirm the signal-to-noise ratio on the star, then a “time holder” that let the pilots speed up or slow down, and then it would be time for the occultation. The event time remained 07:49:11 UTC, with an interception position of Lat 16d24.2m S, Lon 175d2.4m W.

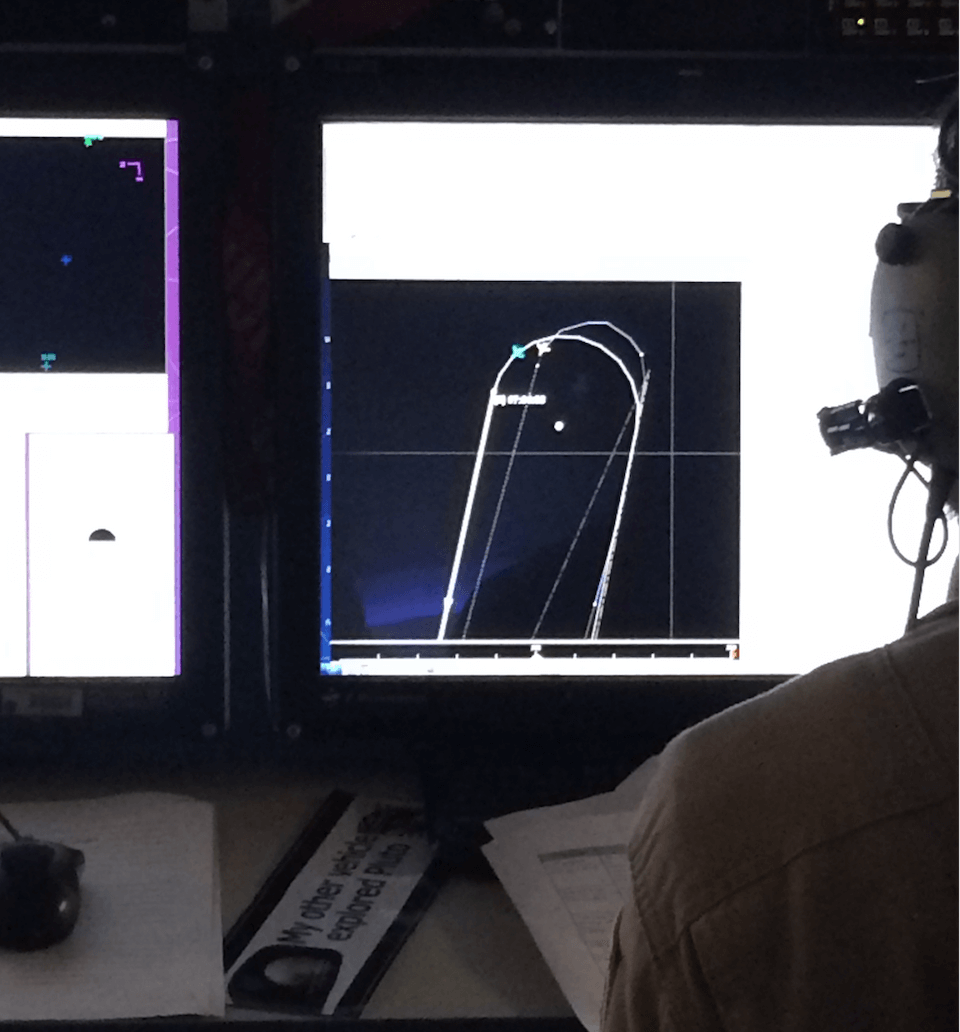

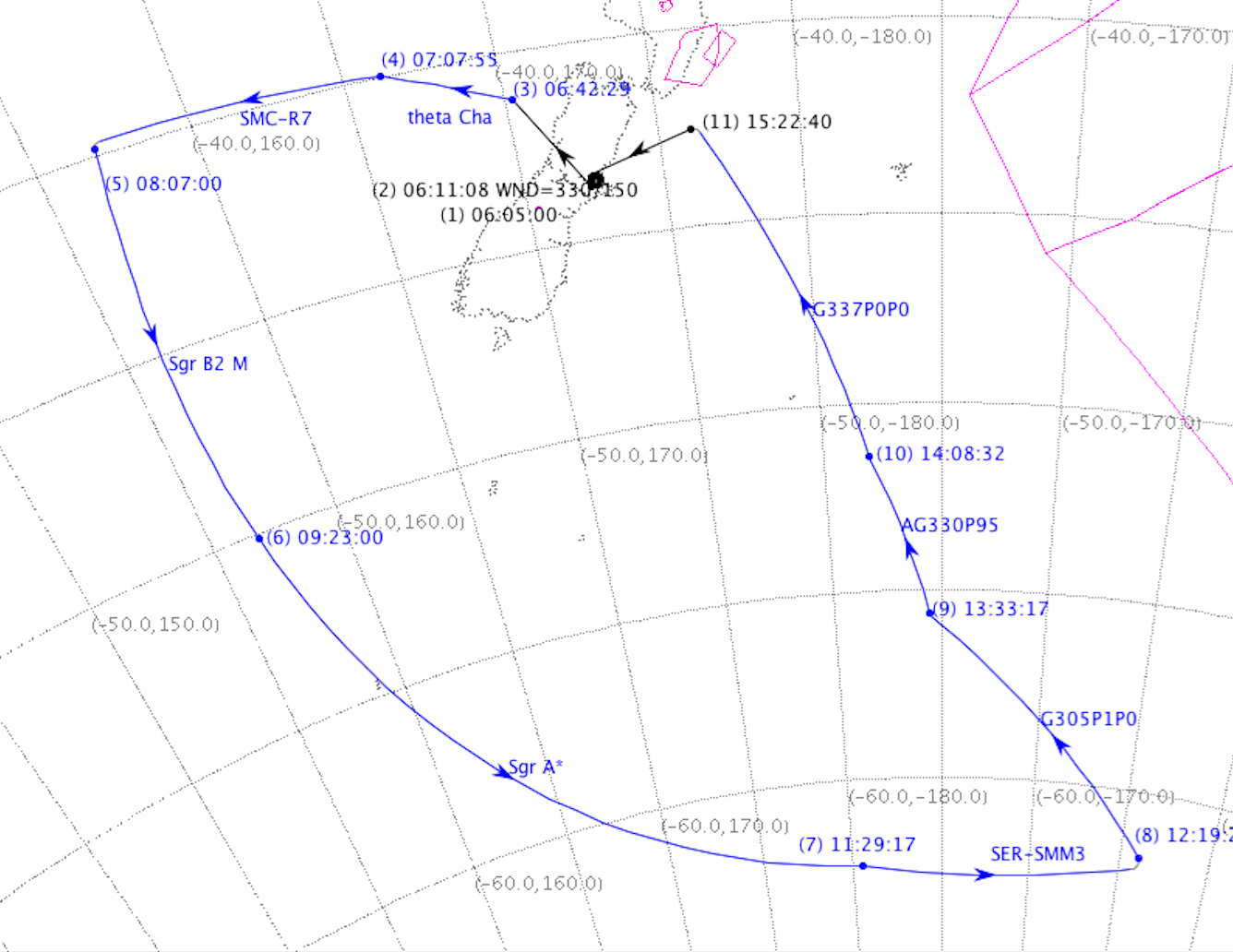

SOFIA flight path for the MU69 occultation flight displayed on the Mission Director’s Console.

The flight path for this occultation flight had us north of Tahiti, catching the occultation (marked by the anchor) on the south-to-north return leg.

Sunset finally came.

Once the sun was down, the cavity door was opened and the telescope began to cool, and we waited anxiously for a test image on the camera. We had a specific question to answer: on the occultation leg we could place a 9th-magnitude star in the same small field of view to help with the data analysis (it helps with pixel registration), but we did not know whether it would saturate under the observing conditions.

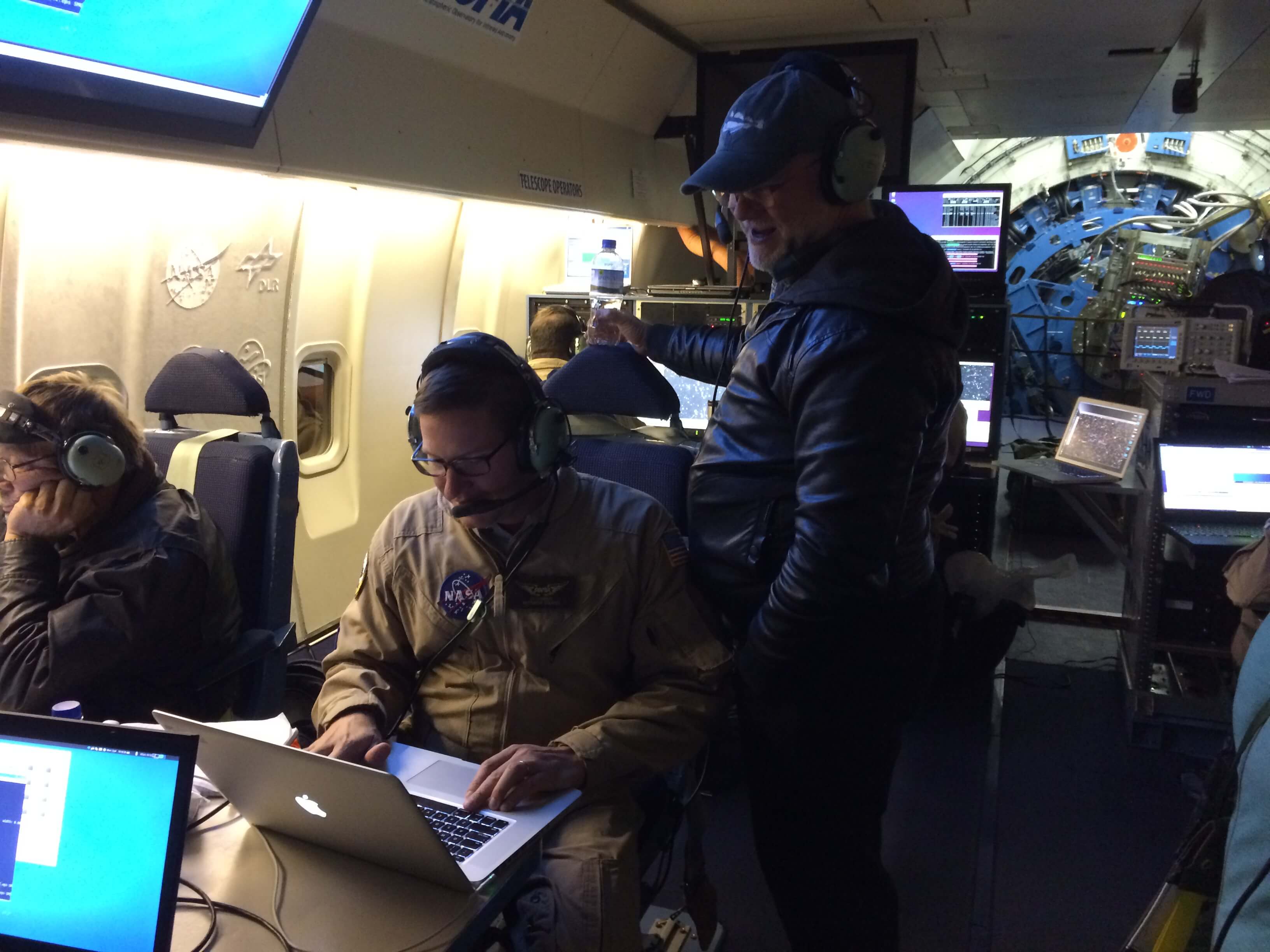

Manuel Wiedemann (seated, left) and Enrico Pfueller (seated, right) with Eliot Young (standing) after capturing the first test image and confirming that the 9th-magnitude star did not saturate. We also took a background measurement.

Then it was time for the pilots and Karina Leppik, the mission director, to coordinate a 180-degree turn that would line us up for an interception at Lat 16d24.2m S, Lon 175d2.4m W at 07:49:11 UTC from 38,000 feet.

The turn into the occultation leg.

Expectant astronomers. This picture was taken minutes before the event. From left to right: Eliot Young, Simon Porter, Manuel Wiedemann (sitting), Alan Stern, Marc Buie, and Enrico Pfueller (sitting, off screen).

The size of MU69 is unknown: Hubble Space Telescope images only provide its visual magnitude. That leaves a degeneracy between a large, dark object and a small, highly reflective one, since both combinations can reflect the same total sunlight, which is what we measure as a magnitude.
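
The degeneracy can be sketched numerically. Assuming reflected brightness scales as albedo times cross-sectional area (p·D²), a fixed measured magnitude means p·D² is fixed, so D = D_ref·√(p_ref/p). The 40 km, 4%-albedo reference body below is hypothetical, chosen only to span the 10 to 40 km range quoted for MU69.

```python
import math


def equivalent_diameter(d_ref_km: float, albedo_ref: float, albedo: float) -> float:
    """Diameter that reflects the same total sunlight as a reference body.

    Assumes reflected flux scales as albedo * cross-sectional area (p * D**2),
    so holding the measured magnitude fixed gives D = D_ref * sqrt(p_ref / p).
    """
    return d_ref_km * math.sqrt(albedo_ref / albedo)


# Hypothetical reference body: 40 km across with a dark, 4% albedo surface.
for p in (0.04, 0.16, 0.64):
    print(f"albedo {p:.2f} -> diameter {equivalent_diameter(40.0, 0.04, p):.0f} km")
# albedo 0.04 -> diameter 40 km
# albedo 0.16 -> diameter 20 km
# albedo 0.64 -> diameter 10 km
```

A factor of 4 in diameter trades against a factor of 16 in albedo, which is why a magnitude alone cannot pin down the size, and why timing the shadow is so valuable.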

MU69’s diameter is roughly 10 to 40 km. The occulted star is so much farther away that its light arrives as essentially parallel rays, so the shadow MU69 casts on Earth from 43.3 AU (about 6.5 billion km) away is about the same size as the object itself, ~10-40 km. We wanted to hit the shadow’s center line. With the shadow moving across the Earth at ~90,000 km/h (25 km/s), the event would last 0.4-1.6 seconds. We were reading out the camera at 20 frames per second, so the ‘dip’ would appear in 8 to 32 images.
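
The duration arithmetic above is easy to check in a few lines. This sketch assumes the SOFIA track crosses the shadow along its center line, so the chord equals the full diameter; an off-center track would see a shorter chord and a shorter dip. The function names are illustrative.

```python
SHADOW_SPEED_KMH = 90_000.0  # shadow speed across the Earth, from the text
FRAME_RATE_HZ = 20.0         # camera read-out rate, from the text


def event_duration_s(chord_km: float, speed_kmh: float = SHADOW_SPEED_KMH) -> float:
    """Seconds for the shadow to sweep past a fixed observer along a chord of this length."""
    speed_kms = speed_kmh / 3600.0  # 90,000 km/h is 25 km/s
    return chord_km / speed_kms


def frames_in_dip(chord_km: float, fps: float = FRAME_RATE_HZ) -> float:
    """Number of camera frames recorded while the star is hidden."""
    return event_duration_s(chord_km) * fps


# Center-line crossing assumed, so chord = diameter.
for diameter_km in (10.0, 40.0):
    print(f"{diameter_km:.0f} km: {event_duration_s(diameter_km):.1f} s, "
          f"~{frames_in_dip(diameter_km):.0f} frames")
# 10 km: 0.4 s, ~8 frames
# 40 km: 1.6 s, ~32 frames
```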

We did not “see” the event in real time. I think I blinked!

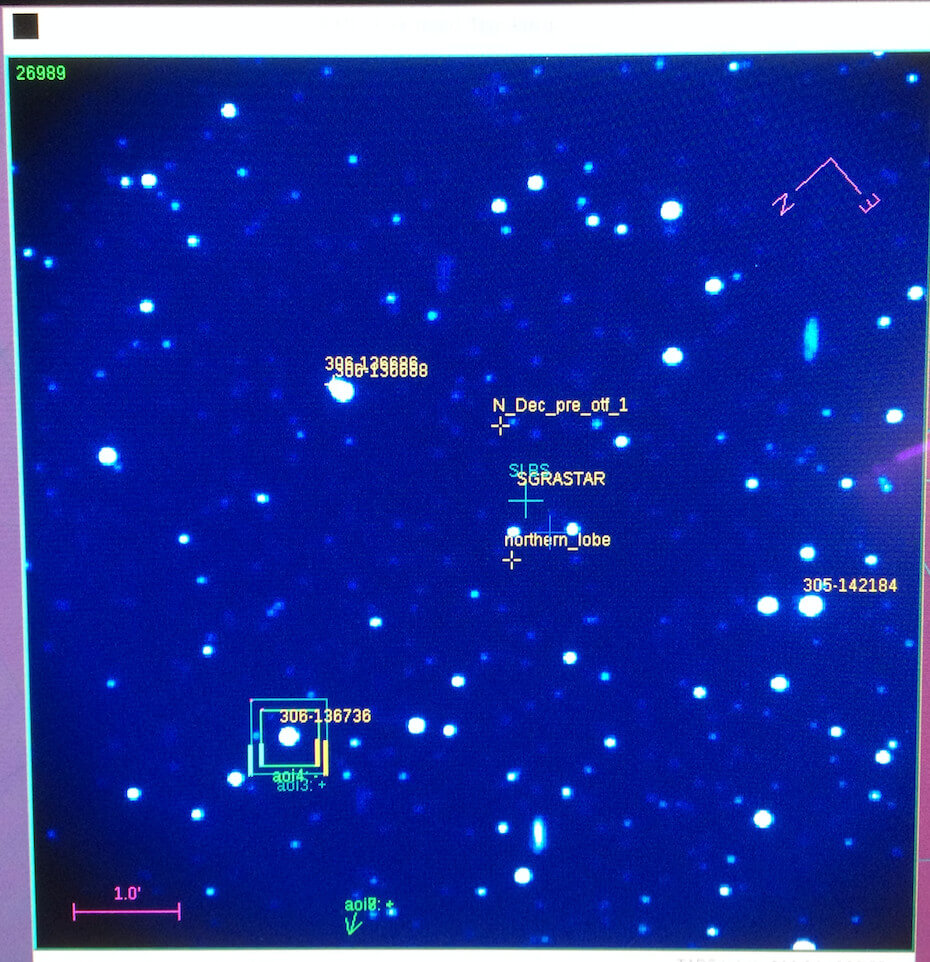

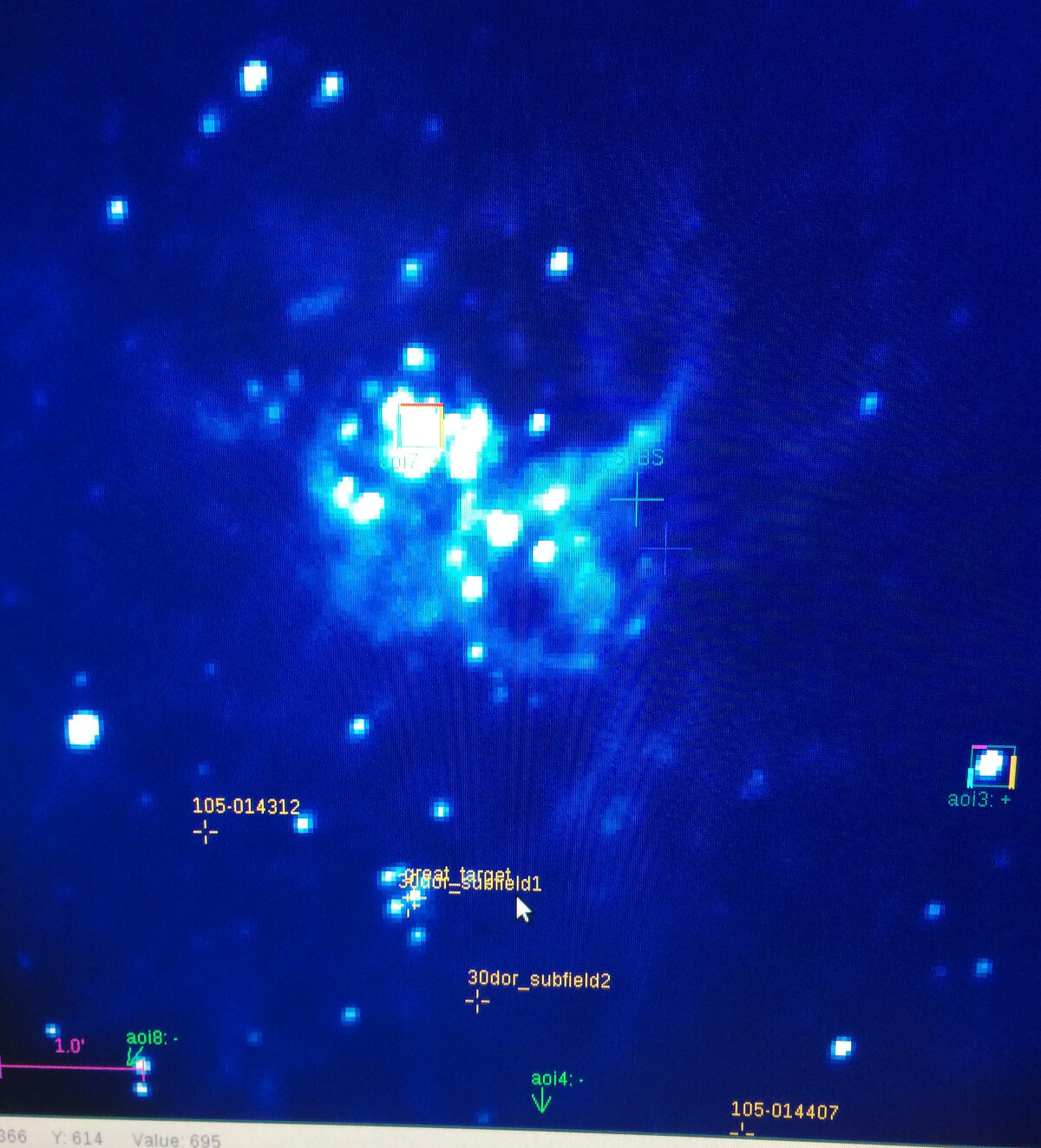

The geometry with the full moon caused additional challenges for the data. In the full-frame image, which was taken specifically to help with subtracting the background, the occultation star is the one at bottom center with the bull’s-eye (concentric circles) around it. The brighter stars marked with squares are reference stars. Oh, how we wished MU69 had passed in front of one of them!

The occultation star is in the bottom middle, marked with the bulls-eye concentric circles. All eyes were on this star during this flight.

Nonetheless, over 60,000 frames were taken over a continuous 50-minute period centered on this <2-second event, which also samples the instability region around MU69. No data were lost. The camera did not blink, even if we did.
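
As a consistency check on the frame count, this sketch assumes the recording lasted exactly 50 minutes at 20 frames per second and was centered exactly on the predicted 07:49:11 UTC event time. The July 10 date is assumed from the flight described here.

```python
from datetime import datetime, timedelta

FRAME_RATE_HZ = 20                               # camera read-out rate, from the text
RECORD_LENGTH = timedelta(minutes=50)            # assumed exact recording length
EVENT_UTC = datetime(2017, 7, 10, 7, 49, 11)     # predicted event time (date assumed)

# Total frames recorded at a constant frame rate.
total_frames = int(RECORD_LENGTH.total_seconds() * FRAME_RATE_HZ)

# A window centered on the event extends half the record length on each side.
start = EVENT_UTC - RECORD_LENGTH / 2
end = EVENT_UTC + RECORD_LENGTH / 2

print(total_frames)              # 60000
print(start.time(), end.time())  # 07:24:11 08:14:11
```

Against roughly 60,000 frames, the expected dip of 8 to 32 frames is a tiny fraction of the data, which is part of why the long baseline on either side matters for calibration.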

The flight’s excitement continued with some telescope testing under simulated turbulence, created by the pilots deploying the “speed brakes,” and then with watching the fog reports from Christchurch Airport. If the fog had not lifted, we might have needed to divert to Auckland. This time the weather gods stayed kind, and we landed safely at Christchurch shortly after midnight.

With data in hand, the SwRI scientists deplaned SOFIA to catch a short nap before heading off to South America to start preparing for the third of MU69’s three occultation events, on July 17th.

In summary, on July 10th SOFIA delivered on its mission, flying to a point above the Earth within 10 km and within 1 second of the target. We had no clouds to deal with, just winds and the full moon. The winds were accommodated by guiding the airplane with heading and speed tweaks. The full moon posed a challenge, but photometry techniques such as scattered-light images and reference stars, on top of the usual bias frames, darks, and flats, are now all in the toolbox.

We hunted.

We did not blink.

Now we wait…

…to learn what the New Horizons team finds.

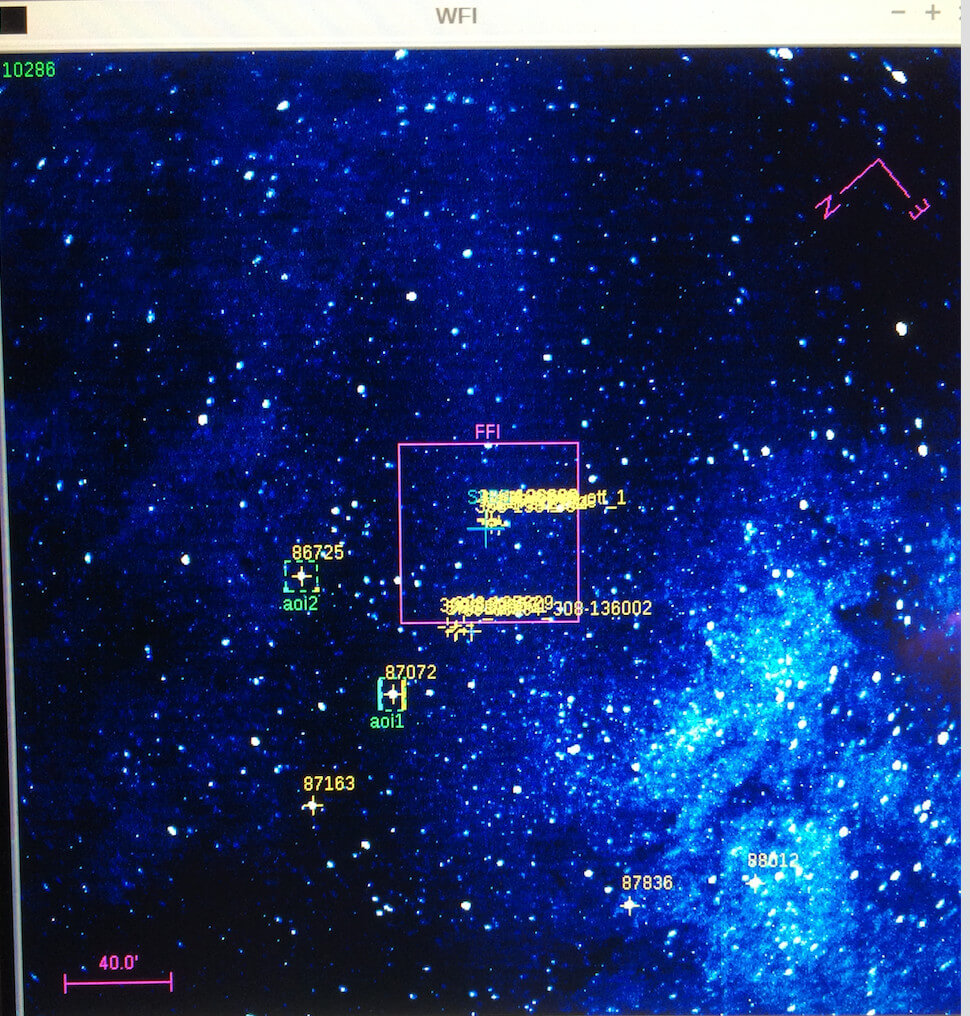

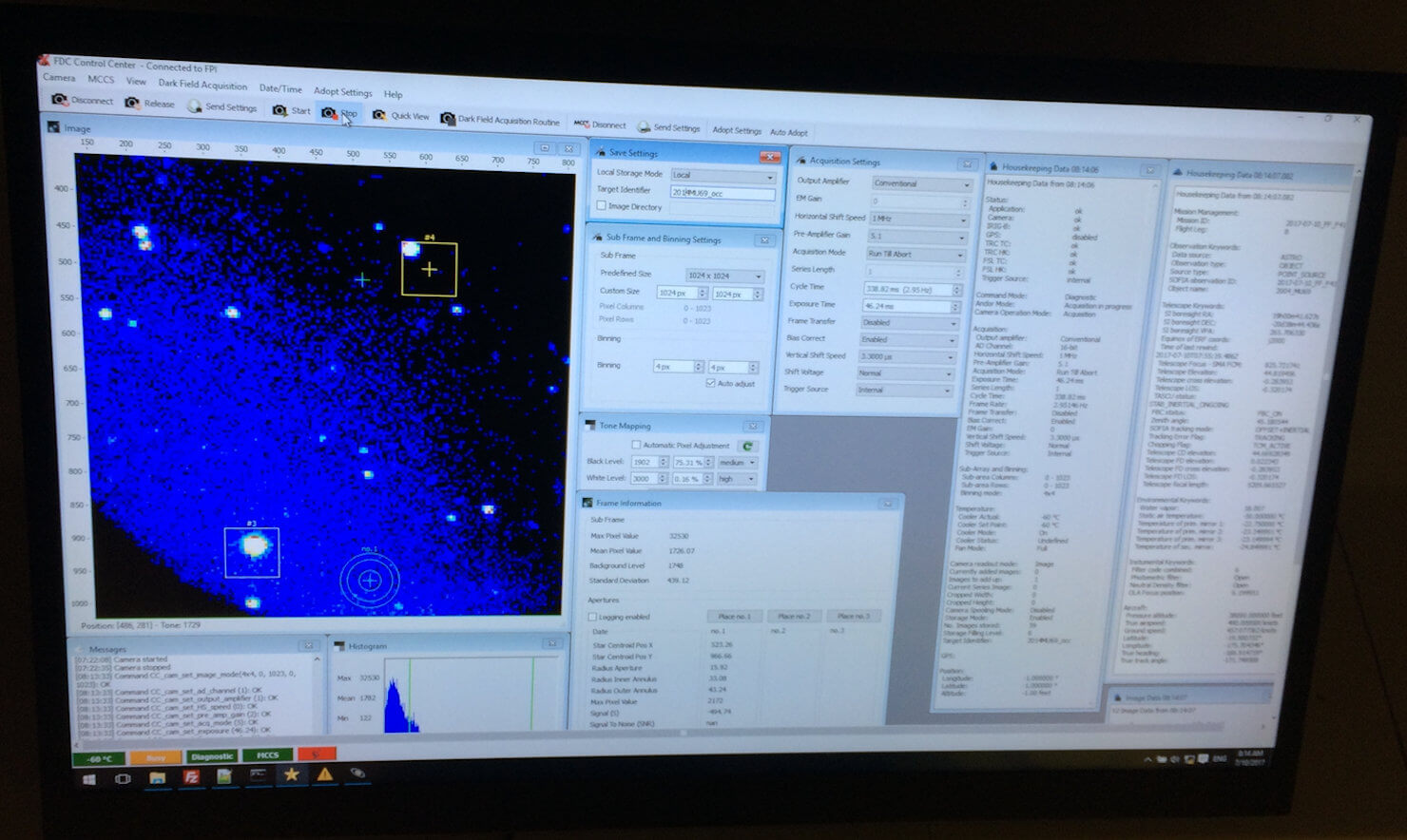

SOFIA’s focal plane imager (visible guide camera), with an 8′×8′ field of view, centered on 30 Doradus.

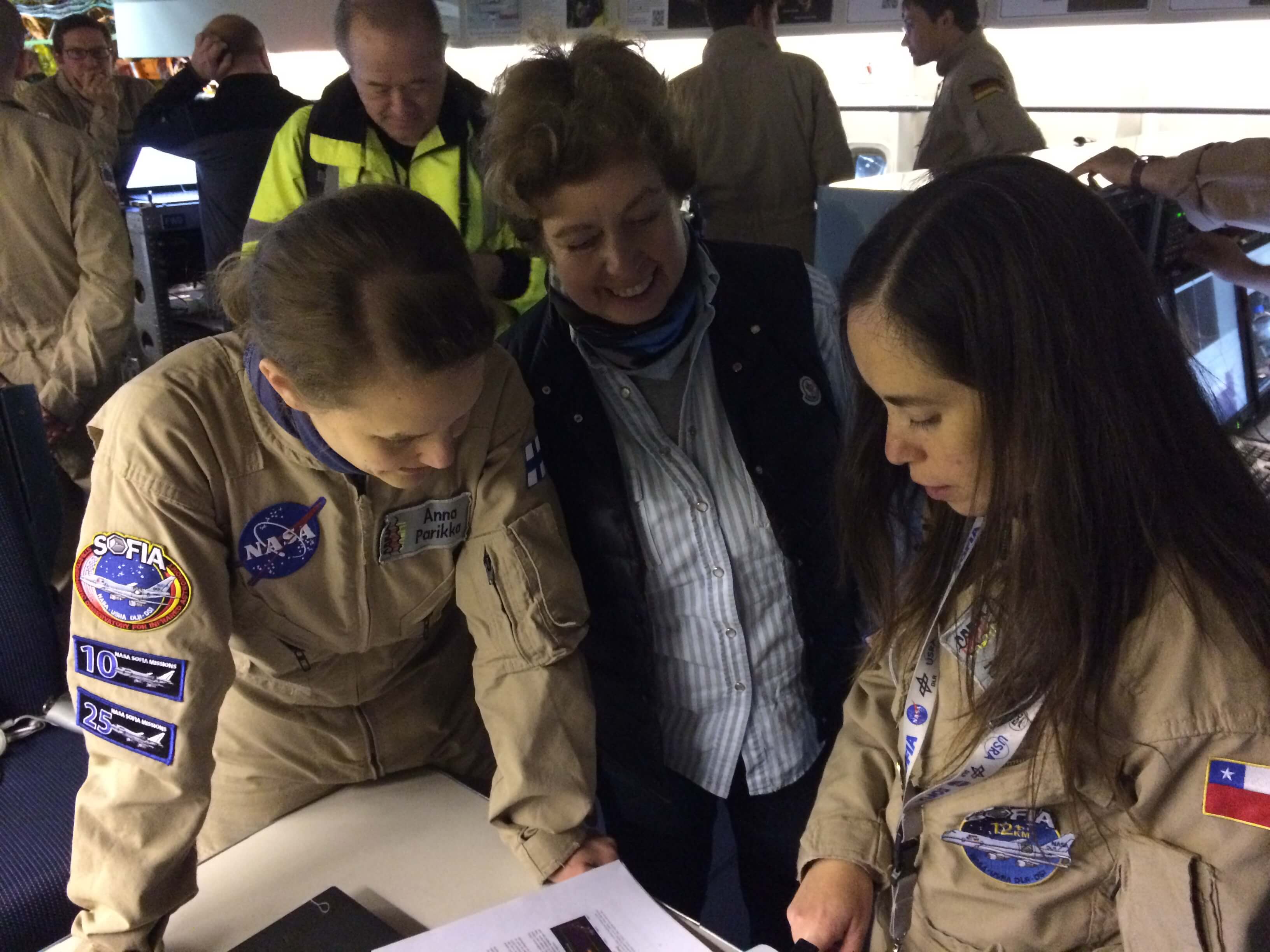

James Jackson (standing) talking strategy with Ed Chambers (seated), instrument scientist.

Flight plan for the June 28th SOFIA flight.